Introduction: Intelligent Bat Detector

Bats emit echolocation calls at high frequencies to 'see' in the dark. We humans need to be able to monitor bat species to help prevent the destruction of their environment during our ever-increasing exploitation of the planet. Recording and analysing bat call audio is a great way to achieve this monitoring, especially if it can be fully automated.

This particular bat detector device can be run off 12 volt batteries and be deployed in the wild for days / weeks at a time with data being transmitted every now and again via a LoRa radio link. Essentially, it records 30 second chunks of audio, does a quick analysis of that audio using machine learning, and then renames the audio file with species, confidence and date if it detected a bat. All other recordings are automatically deleted to save disc space and time for the biologist / researcher.
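The keep-or-delete step described above can be sketched in a few lines of Python. This is only an illustration, not the project's actual code: the function name `file_chunk`, the filename layout and the `min_confidence` threshold are all assumptions.

```python
import os
from datetime import datetime
from typing import Optional


def file_chunk(wav_path: str, species: Optional[str], confidence: float,
               recorded_at: datetime, min_confidence: float = 0.01) -> Optional[str]:
    """Rename a recording if a bat was detected, otherwise delete it.

    Returns the new path, or None if the file was deleted.
    The name layout (species_confidence_date.wav) is hypothetical.
    """
    if species is None or confidence < min_confidence:
        os.remove(wav_path)  # nothing detected: free the disc space
        return None
    stamp = recorded_at.strftime("%Y-%m-%d_%H-%M-%S")
    new_name = f"{species}_{round(confidence * 100):03d}pc_{stamp}.wav"
    new_path = os.path.join(os.path.dirname(wav_path), new_name)
    os.rename(wav_path, new_path)
    return new_path
```

Putting the species and confidence into the filename means a researcher can sort or filter the recordings with nothing more than a file browser.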

*These instructions assume you have good Raspberry Pi skills and are able to flash an SD card and use the command line for installing software. Some moderate soldering skills are also required.

Features:

- Full spectrum ultrasonic audio recording in mono at 384 kS (kilosamples) per second.

- Results can be displayed in near real time (with a 30 second delay) in text, spectrogram or bar chart format.

- Runs off a 12 V battery or any power supply from 6 to 16 V.

- Software is optimised for power saving and speed by using asynchronous classification.

- Average battery life is about 5 hours using 10 x 1.2 V NiMH AA rechargeable batteries.

- Automatically classifies the subject in a choice of resolutions, e.g. animal / genus / species.

- Retains data even if it is only 1% confident, up to a set limit (e.g. 5 GB), and then starts deleting the worst of it to prevent data clogging.

- Batch data processing mode can be used for re-evaluating any previous data or new data from other sources.

- Open source software.

- New models for new geographical zones can be trained using the core software.
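The 'keep everything, then delete the worst' rule in the feature list could work roughly as below. This is a minimal sketch, assuming that each file's confidence is already known (here passed in as a dict); `prune_recordings` and its arguments are hypothetical names, not part of the project.

```python
import os
from typing import Dict, List


def prune_recordings(confidences: Dict[str, float], limit_bytes: int) -> List[str]:
    """Delete the least confident recordings until the total size fits the limit.

    confidences maps a file path to the classifier confidence (0.0 to 1.0).
    Returns the list of deleted paths, lowest confidence first.
    """
    sizes = {path: os.path.getsize(path) for path in confidences}
    total = sum(sizes.values())
    deleted = []
    # Walk through the recordings from worst to best confidence.
    for path in sorted(confidences, key=confidences.get):
        if total <= limit_bytes:
            break
        os.remove(path)
        total -= sizes[path]
        deleted.append(path)
    return deleted
```

With a 5 GB limit, the call would look like `prune_recordings(confidences, 5 * 1024**3)`.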

Supplies

The core ingredients of this project are:

- Raspberry Pi 4 with 4 GB RAM

- Case and fan

- ADC Pi shield for sensing battery and supply voltages.

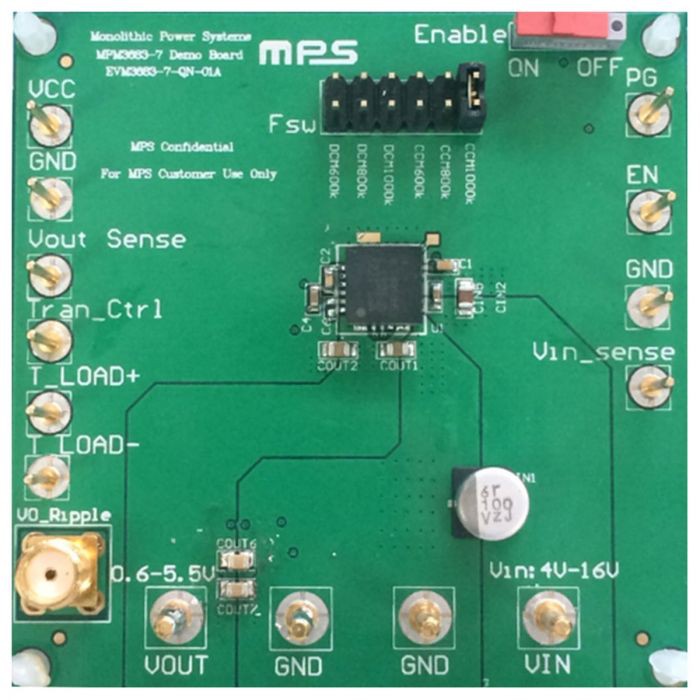

- EVM3683-7-QN-01A Evaluation Board for supplying a steady 5 V to the Nano.

- Dragino LoRa GPS hat.

- Adafruit Si7021 temperature and humidity sensor.

- 5 Inch EDID Capacitive Touch Screen 800x480 HDMI Monitor TFT LCD Display.

- 12 V rechargeable battery pack.

- WavX bioacoustics R software package for wildlife acoustic feature extraction.

- Random Forest R classification software.

- In house developed deployment software.

- Full spectrum (384 kS per second) audio data.

- UltraMic 384 USB microphone.

- Waterproof case Max 004.

Small parts:

- 3.3 K wired resistor.

- 48.1 K wired resistor.

- 270 ohm 0805 SMD resistor, ±0.1%, 0.125 W

- RS PRO Panel Mount, Version 2.0 USB Connector, Receptacle

Step 1: What Have Been the Key Challenges in Building This Device?

- Choosing the right software. Initially I started off using a package designed for music classification called 'PyAudioAnalysis', which gave options for both Random Forest and human voice recognition deep learning using TensorFlow. Both systems ran ok, but the results were very poor. After some time chatting on this very friendly Facebook group, Bat Call Sound Analysis Workshop, I found a software package written in the R language with a decent tutorial that worked well within a few hours of tweaking. As a rule, if the tutorial is rubbish, then the software should probably be avoided! The same was true when creating the app with the touchscreen: I found one really good tutorial for GTK 3 + Python, with examples, which set me up for a relatively smooth ride.

- Finding quality bat data for my country. In theory, there should be numerous databases of full spectrum audio recordings in the UK and France, but when actually trying to download audio files, most of them seem to have been closed down or limited to the more obscure 'social calls'. The only option was to make my own recordings which was actually great fun and I managed to find 6 species of bat in my back yard. This was enough to get going.

- Using GTK 3 to produce the app. Whilst Python itself is very well documented on Stack Exchange etc., solving more detailed problems with GTK 3 was hard going. One bug was completely undocumented and took me 3 days to remove! The software is also rather clunky and not particularly user friendly or intuitive. Compared to ordinary programming with Python, GTK was NOT a pleasant experience, although it's very rewarding to see the app in action.

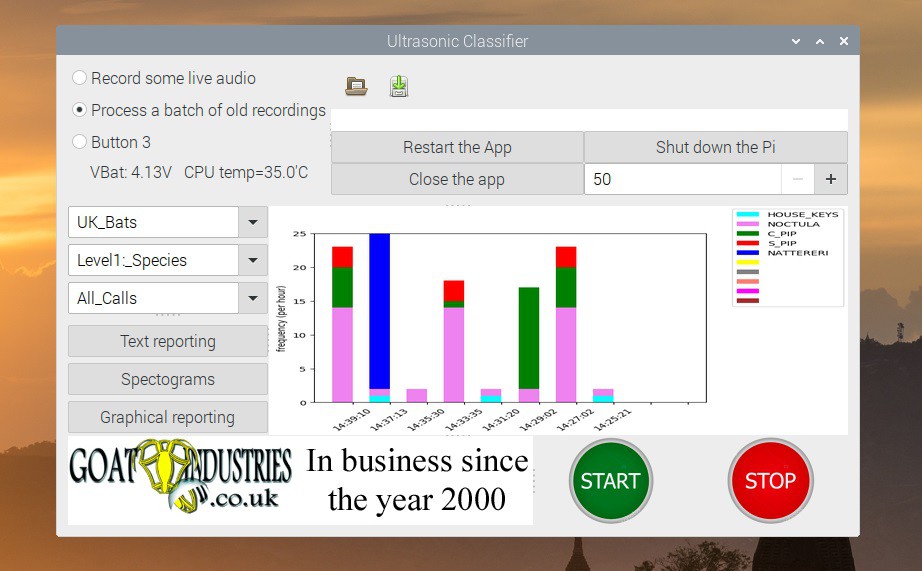

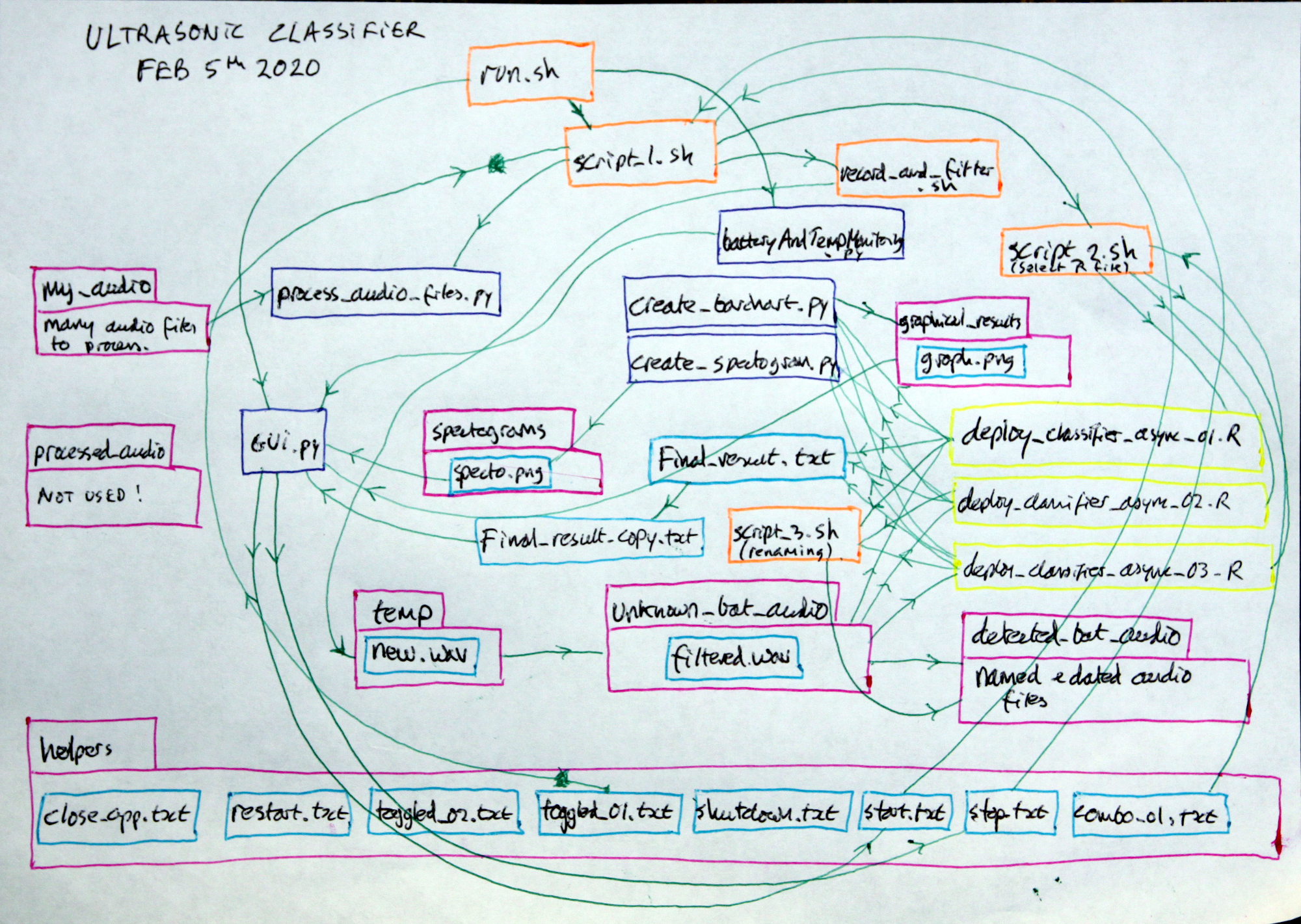

- Designing the overall architecture of the app - GTK only covers a very small part of the app: the touch screen display. The rest of it relies on various Bash and Python scripts to interact with the main deployment script, which is written in R. Learning the R language was really not a problem as it's a very generic language and only seems to differ in its idiosyncratic use of syntax, just like any other language really. The 'stack' architecture initially started to evolve organically with a lot of trial and error. As a hacker, I just put it together in a way that seemed logical and did not involve too much work. I'm far too lazy to learn how to build a stack properly or even actually learn any language, but, after giving a presentation to my local university computer department, everybody seemed to agree that that was perfectly ok for product development. Below is a quick sketch of the stack interactions, which will be pure nonsense to most people but is invaluable to remind myself of how it all works:

- Creating a dynamic bar chart - I really wanted to display the results of the bat detection system in the easiest and most comprehensible way, and the boring old bar chart seemed like the way forwards. However, to make it a bit more exciting, I decided to have it update dynamically so that as soon as a bat was detected, the results would appear on the screen. Using spectrograms might have been ok, but they're quite hard to read on a small screen, particularly if the bat call is a bit faint. After ten days of trial and error, I got a block of code working in the R deployment script that produces a CSV file with all the correctly formatted labels and table data. This file is read by another Python script, which uses the ubiquitous matplotlib library to create a PNG image for GTK to display. The crux of it was getting the legend to initialise itself automatically, otherwise it would not work when switching to a new data set. Undoubtedly, this will save a whole load of trouble in the future.

- Parallelism - some parts of the stack, most particularly the recording of live audio, have to be done seamlessly, one chunk after another. This was achieved in Bash using some incredibly simple syntax: the & character and the wait command. It's all done in two very neat lines of code:

arecord -f S16 -r 384000 -d ${chunk_time} -c --device=plughw:r/home/tegwyn/ultrasonic_classifier/temp/new.wav &

wait

Choosing to use the Bash environment for recording audio chunks was a bit of a no brainer due to the ease of use of the ALSA library and its ability to record at 384 kS per second. I did not even consider the possibility of doing this any other way.

More recently, I realised that some parts of the stack needed to be linear, in that blocks of code needed to run one after the other, and other blocks needed to run concurrently. This was most obvious with the deployment of the Random Forest models, which only need to be loaded into memory once per session rather than every time a classification is required. It was actually quite fun to re-organise the whole stack, but it required that I documented what every script did and thought really carefully about how to optimise it all. The different parts of the stack, written in different languages, communicate with each other by polling various text files in the 'helpers' directory, which very often don't even have any contents!
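For readers more at home in Python than Bash, the same 'start it in the background, then wait' pattern can be sketched with `subprocess`. This is an illustration only: `record_and_process` is a hypothetical helper, and a real script would also have to move or rename each finished file before the next recording overwrites it.

```python
import subprocess
from typing import Callable, List, Sequence


def record_and_process(record_cmd: Sequence[str], n_chunks: int,
                       process: Callable[[int], None]) -> List[int]:
    """Record chunks back to back, processing chunk i-1 while chunk i records.

    record_cmd is the recorder command line (arecord on the real device).
    Returns the exit codes of the recorder, one per chunk.
    """
    exit_codes = []
    for i in range(n_chunks):
        recorder = subprocess.Popen(list(record_cmd))  # like 'cmd &' in Bash
        if i > 0:
            process(i - 1)  # runs while the recorder is busy
        exit_codes.append(recorder.wait())  # like 'wait' in Bash
    if n_chunks > 0:
        process(n_chunks - 1)  # the last chunk has no successor
    return exit_codes
```

The point of the pattern is that the slow work on the previous chunk happens during the recording of the next one, so the only gap in the audio is the time it takes to restart the recorder.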

- Finding a decent battery to 5 V switching regulator and fuel gauge - It's quite amazing: nobody has yet created a compact 5 V power supply that can both monitor the battery state of charge AND deliver a steady 5 V from a lead acid battery AND work at a frequency above 384 kHz. Fortunately, after poring over various datasheets for a day or two, I found one chip made by Monolithic that seemed to meet all the specs. Even more fortunate was that the company supplied a nice evaluation board at a reasonable price that did not attract customs and handling fees from the couriers. Well done Monolithic - we love you soooooo much! After running a full CPU stress test for 10 minutes, the chip temperature was only 10 degrees above ambient.

- Optimising the code for minimal power usage and minimal SD card stress - This involved completely redesigning part of the stack so that the classification scripts, written in R, became asynchronous. On pressing the start button, the script runs in a continuous loop, forever waiting for a new .wav chunk to appear in the 'unknown_bat_audio' directory. The advantage in doing this is that the first part of the script can be isolated as a 'set-up' block which loads all the .rds model files into memory in a one off hit, rather than having to do this for every audio chunk created.
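The 'load once, then loop' structure of the asynchronous classifier can be sketched as follows. The real scripts are written in R; this Python version is only an illustration, and `classify_forever`, `load_models` and `classify` are hypothetical names. A real version would also need to make sure the recorder has finished writing a file before reading it.

```python
import os
import time
from typing import Callable, List


def classify_forever(watch_dir: str, load_models: Callable[[], object],
                     classify: Callable[[object, str], None],
                     poll_seconds: float = 1.0,
                     max_polls: int = 0) -> List[str]:
    """Load the models once, then classify each new .wav file as it appears.

    max_polls=0 means loop until interrupted; a positive value stops the
    loop after that many polls (handy for testing).
    """
    models = load_models()  # one-off set-up block
    seen = set()
    done = []
    polls = 0
    while max_polls == 0 or polls < max_polls:
        for name in sorted(os.listdir(watch_dir)):
            if name.endswith(".wav") and name not in seen:
                seen.add(name)
                classify(models, os.path.join(watch_dir, name))
                done.append(name)
        polls += 1
        time.sleep(poll_seconds)
    return done
```

Because the expensive model loading sits outside the loop, each new chunk only pays for the classification itself.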

Step 2: Install the Software

- Flash a 128 GB SD card with the latest Raspberry Pi image using Balena Etcher or similar.

- Boot up the Pi and create a new user called tegwyn (rather than pi) as below:

Open a terminal and run:

sudo adduser tegwyn

sudo adduser tegwyn sudo

echo 'tegwyn ALL=(ALL) NOPASSWD: ALL' | sudo tee /etc/sudoers.d/010_tegwyn-nopasswd

sudo pkill -u pi

Login as tegwyn and run:

git clone https://github.com/paddygoat/ultrasonic_classifie...

- Find the file:

ultrasonic_classifier_dependancies_install_nano.sh

in /home/tegwyn/ultrasonic_classifier and open it in a text editor. Follow the instructions within.

- The whole script could be run from a terminal using:

cd /home/tegwyn/ultrasonic_classifier/ && chmod 775 ultrasonic_classifier_dependancies_install_nano.sh && bash ultrasonic_classifier_dependancies_install_nano.sh

- ...or run each line of the script one by one for better certainty of success.

- Find the file: run.sh in the ultrasonic_classifier directory and edit the password at line 156 accordingly. Also, remove the line:

sudo -S jetson_clocks

at line 6, as this is for the Nvidia Jetson Nano only.

- Find the bat icon on the desktop and double click to run the app.

Step 3: Soldering and Wiring

- Plug the AB Electronics ADC hat onto the Pi. Some extension pins will be required to get above the Pi's case.

- The Pi MUST have a fan, which should be wired to one of the 5 V pins.

- Find R10 on the Monolithic eval board and replace it with a high tolerance 270 ohm 0805 resistor. Check with a voltmeter that the board now outputs 5.0 V. The eval board will accept up to 16 V input.

- Wire in the power supply with a 48.7 K resistor from the 12 V battery pack to analog pin 1 on the ADC hat.

- Wire the 5 V out to ADC pin 2 via a 3.3 K resistor AND direct to the 5 V pins on the Pi.

- Connect USB and HDMI cables to the touchscreen.

- Set the enable switch on the eval board to 'on'.
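The series resistors in this step scale the battery and 5 V rail down to something the ADC can read. The arithmetic can be sketched as below, under one loud assumption: that each ADC input behaves like a fixed resistance to ground (16.8 K is used here as an assumed figure, to be checked against the ADC hat's datasheet). The function names are hypothetical.

```python
def pin_from_source(source_volts: float, series_ohms: float,
                    input_ohms: float = 16_800.0) -> float:
    """Voltage seen at the ADC pin for a given source voltage.

    The series resistor and the ADC input resistance form a divider:
    pin = source * input / (series + input).
    input_ohms is an ASSUMED value; check the ADC hat's datasheet.
    """
    return source_volts * input_ohms / (series_ohms + input_ohms)


def source_from_pin(pin_volts: float, series_ohms: float,
                    input_ohms: float = 16_800.0) -> float:
    """Work back from the voltage at the ADC pin to the voltage at the source."""
    return pin_volts * (series_ohms + input_ohms) / input_ohms
```

Under that assumption, a 12 V battery behind the 48.7 K resistor would appear as roughly 3.08 V at the pin, and the 5 V rail behind the 3.3 K resistor as roughly 4.18 V. The software can then use `source_from_pin` to convert the readings back to real voltages.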

Step 4: Record and Classify !!!!

Click on the bat icon on the desktop and the GUI should appear as above. Select 'Record and classify' and hit the 'Graphical reporting' button. Then press play and rattle some house keys in front of the mic to test that the system is working. It should display a pink bar on the graph with 'house keys' in the legend after about 30 seconds.
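As described in Step 1, the graph is a PNG drawn by a Python script from a CSV file that the R deployment script writes. A minimal sketch of that idea follows. The CSV layout (a header row, then `label,count` rows) and both function names are assumptions, not the project's actual format. The legend is rebuilt from the data on every call, so a new data set with different labels needs no special handling.

```python
import csv
from typing import Dict


def read_counts(csv_path: str) -> Dict[str, float]:
    """Read 'label,count' rows into a mapping, skipping the header row."""
    counts = {}
    with open(csv_path, newline="") as handle:
        reader = csv.reader(handle)
        next(reader, None)  # skip the header row
        for row in reader:
            if len(row) >= 2:
                counts[row[0]] = float(row[1])
    return counts


def draw_barchart(counts: Dict[str, float], png_path: str) -> None:
    """Draw one bar per label and save the chart as a PNG."""
    import matplotlib
    matplotlib.use("Agg")  # render off-screen, no display needed
    import matplotlib.pyplot as plt

    fig, ax = plt.subplots(figsize=(8, 4.8), dpi=100)  # 800x480 pixels
    for label, value in counts.items():
        ax.bar(label, value, label=label)  # one legend entry per bar
    ax.set_ylabel("Detections")
    ax.legend()
    fig.savefig(png_path)
    plt.close(fig)
```

Calling `draw_barchart(read_counts("results.csv"), "chart.png")` after each classification would refresh the image for the GUI to reload; both file names here are placeholders.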

Step 5: Optional Step: Connect to the Cloud

This step is not essential and is still in development.

- Follow the Adafruit tutorial to connect to The Things Network (TTN). This will get data through a local gateway if one is in range, but will not store the data or produce fancy graphs.

- At the end, find the 'Payload Format' tab and paste in this code:

    function Decoder(bytes, port) {
      // Decode an uplink message from a buffer
      // (array) of bytes to an object of fields.
      var decoded = {};
      if (port === 1) decoded.temp = (bytes[1] + bytes[0] * 256)/100;
      if (port === 1) decoded.field1 = (bytes[1] + bytes[0] * 256)/100;
      if (port === 1) decoded.humid = (bytes[3] + bytes[2] * 256)/100;
      if (port === 1) decoded.field2 = (bytes[3] + bytes[2] * 256)/100;
      if (port === 1) decoded.batSpecies = (bytes[5] + bytes[4] * 256);
      if (port === 1) decoded.field3 = (bytes[5] + bytes[4] * 256);
      return decoded;
    }

- Register with ThingSpeak and find 'Create new Channel' to process the data. Their instructions are very good and it's dead easy!

- Go back to TTN and find the 'Integrations' tab and add ThingSpeak with the appropriate channel ID and write API key from the new ThingSpeak channel.

- Now use the lora_test_01.py script to send data from the Raspberry Pi.
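The payload decoder above expects six bytes: temperature, humidity and species, each as a 16-bit value with the most significant byte first, and the first two scaled by 100. A sender that matches it could pack the data as sketched below. This is not taken from lora_test_01.py; the function names and the idea of a numeric `species_id` are assumptions based only on the decoder.

```python
import struct


def encode_payload(temp_c: float, humidity_pc: float, species_id: int) -> bytes:
    """Pack three readings into six bytes, most significant byte first.

    Temperature and humidity are sent as hundredths, matching the /100
    in the decoder. Values are unsigned 16-bit, so negative temperatures
    are not representable in this sketch.
    """
    return struct.pack(">HHH", round(temp_c * 100),
                       round(humidity_pc * 100), species_id)


def decode_payload(payload: bytes) -> dict:
    """Python mirror of the JavaScript decoder, for checking the encoder."""
    temp, humid, species = struct.unpack(">HHH", payload)
    return {"temp": temp / 100, "humid": humid / 100, "batSpecies": species}
```

Running a reading through `encode_payload` and then `decode_payload` on the desktop is a quick way to check the byte order before sending anything over the air.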

Participated in the Raspberry Pi Contest 2020